Design, Build and Operate Docker-based Microservices

- June 27, 2017

At the recent Austin DevOps Days Conference, Flux7 CEO Aater Suleman gave a talk on the “Top Ten Considerations When Planning Docker-based Microservices”. For those of you unable to attend the conference, you can listen to a replay of the presentation here. Or, read on as we share part of his talk focused on the synergy between DevOps, Docker and building microservices.

We are currently seeing three interesting, simultaneous patterns of activity. Their confluence means that Docker-based microservices are something you will come into contact with, if you haven’t already. The three patterns are:

- Microservices, or applications coded as small, loosely coupled services that communicate with each other via lightweight (network) protocols, are quickly gaining popularity. (For a deeper dive on microservices, check out our resource page here.)

- DevOps adoption is growing across companies of all sizes and industries. For those unfamiliar with DevOps, it is an operational model focused on increasing agility and productivity of development and IT operations. (You can find more background on the Flux7 Enterprise DevOps Framework here.)

- Interest in Docker containers continues unabated. If you aren’t familiar with Docker containers already, they build on the concept of Linux containers and package the application code artifacts together with the components around them. Each Docker container has its own file system and its own process space; it is an isolated environment in which a single service or a single piece of code can run. (Docker background can be found here.)

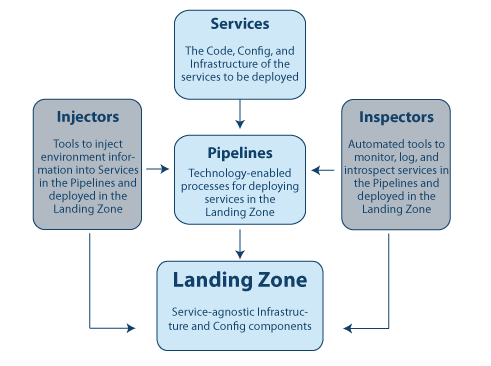

When you realize that Docker containers provide a layer of abstraction between infrastructure and the application, it becomes clear how Docker, microservices and DevOps are really quite synergistic. Our Flux7 DevOps experts have developed an Enterprise DevOps Framework (EDF), which stems from our work with more than 150 companies going through the DevOps transformation. Today we are applying this EDF (shown in the accompanying graphic) specifically to Docker-based microservices.

Docker-Based Microservices

Microservices are constructed in the same way that Docker naturally works. They are composed of application code and a Dockerfile that is carried with the application code. This Dockerfile in turn declares the dependencies of the application. Then there are descriptors of the service itself, which tell the orchestration fabric how much CPU or RAM you want to give to a particular service, or how many containers of a particular service need to run. Docker naturally requires (almost) all of this information to be kept with the service, in just the way you’d want it to be for your microservice to run at full potential.
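As a rough illustration of the kind of descriptor described above, here is a minimal Python sketch. The `ServiceDescriptor` class, its field names, and the registry URL are all hypothetical; real orchestration engines each define their own descriptor format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceDescriptor:
    """Hypothetical descriptor stored in the service's repository beside its Dockerfile."""

    name: str
    image: str            # image built from the service's Dockerfile
    cpu_millicores: int   # CPU requested per container
    memory_mib: int       # RAM requested per container
    replicas: int         # number of containers of this service to run

    def total_resources(self) -> dict:
        """Total CPU and RAM the service asks the orchestration fabric for."""
        return {
            "cpu_millicores": self.cpu_millicores * self.replicas,
            "memory_mib": self.memory_mib * self.replicas,
        }


# Example values are made up for illustration.
billing = ServiceDescriptor(
    name="billing",
    image="registry.example.com/billing:1.4.2",
    cpu_millicores=250,
    memory_mib=512,
    replicas=3,
)
```

Because the descriptor travels with the code, the team that owns the service also owns its resource requests.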

Turning our attention next to pipelines, we can see that there is a distinct need for pipelines to deliver our Docker microservices into the landing zone. The beauty of it is that the job of the pipeline is very easy with containers. Without containers, we find ourselves writing highly customized processes to land services in the landing zone, with a different process for Windows, Linux, Java, etc. With containers, however, you can abstract much of this detail away, which makes pipelines a more natural fit for this model.

In the DevOps Docker microservices model, your landing zone is service agnostic. (Note that this is basically what a Docker orchestration fabric like Kubernetes or ECS is.) The landing zone is a place where you can land containers and services and they just run. Being service agnostic is a boon: when you set up the framework, you don’t have to be concerned about the service. And when you write the service, you only need to know which orchestration engine you are going to run it on; the other specifics of the deployment become unimportant. Said another way, the service is agnostic to the landing zone and the landing zone is agnostic to the service, which is critical to DevOps at large.
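The mutual agnosticism described above can be sketched as a small interface. This is only an illustration under stated assumptions: `LandingZone`, `InMemoryLandingZone`, and `release` are hypothetical names, and the in-memory class stands in for a real orchestration fabric rather than calling one.

```python
from typing import Dict, List, Protocol


class LandingZone(Protocol):
    """Anything that can run a number of containers of an image (hypothetical interface)."""

    def deploy(self, image: str, replicas: int) -> List[str]: ...


class InMemoryLandingZone:
    """Stand-in for an orchestration fabric; it only records what it was asked to run."""

    def __init__(self) -> None:
        self.running: Dict[str, int] = {}

    def deploy(self, image: str, replicas: int) -> List[str]:
        self.running[image] = replicas
        # Return one made-up container ID per replica.
        return [f"{image}#{i}" for i in range(replicas)]


def release(zone: LandingZone, image: str, replicas: int) -> List[str]:
    """Pipeline step that is identical for every service and every landing zone."""
    return zone.deploy(image, replicas)


zone = InMemoryLandingZone()
container_ids = release(zone, "registry.example.com/billing:1.4.2", 2)
```

The pipeline step never inspects what is inside the image, and the landing zone never needs to know which team or language produced it.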

Next let’s discuss inspectors. Inspectors have two important jobs. The first is to inspect the things you would check regardless of the environment or framework, such as conducting a pipeline security analysis. Second, inspectors take advantage of some really neat things you can do with containers from a security inspection standpoint. For example, before anything is deployed or run, you can run a static analysis on a container image to see what software is present in it. This second level of inspection gives you a whole new level of security and vulnerability analysis that’s not typically done with virtual machines.
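To make the idea of static image analysis concrete, here is a minimal sketch of one possible check: comparing the packages found in an image against a denylist before deployment. The function name, the package names, and the versions are hypothetical, and extracting the package list from a real image is assumed to happen elsewhere.

```python
from typing import Dict, List, Set


def find_flagged_packages(
    installed: Dict[str, str], denylist: Dict[str, Set[str]]
) -> List[str]:
    """Return sorted 'name==version' entries whose exact version is denylisted."""
    flagged = []
    for name, version in installed.items():
        if version in denylist.get(name, set()):
            flagged.append(f"{name}=={version}")
    return sorted(flagged)


# Hypothetical package inventory extracted from an image.
installed_packages = {"examplelib": "1.0.1", "othertool": "5.1", "webkit-demo": "2.3"}
# Hypothetical versions the security team has flagged.
flagged_versions = {"examplelib": {"1.0.0", "1.0.1"}, "webkit-demo": {"2.0"}}

findings = find_flagged_packages(installed_packages, flagged_versions)
```

An inspector stage could fail the pipeline whenever `findings` is non-empty, so that a flagged image never reaches the landing zone.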

Last in the EDF are injectors. Injectors insert important information about the landing zone into services. Containers make this process really easy because the service and the landing zone are inherently decoupled. Really, the only major thing left to inject into your services is secrets: the things that allow one service to authenticate to another, or allow a service to authenticate to an outside service or database. While we often use tools like HashiCorp Vault to help us handle microservice secrets, there are several approaches to injectors.
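One common way for a service to receive an injected secret is through an environment variable set by the landing zone. The sketch below assumes that approach; `read_secret`, `MissingSecretError`, and the `DB_PASSWORD` variable name are hypothetical, and this is not how any particular secrets tool works internally.

```python
import os
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when an expected secret was not injected into the container."""


def read_secret(name: str, environ: Optional[Mapping[str, str]] = None) -> str:
    """Read a secret from the environment, failing loudly if it is absent or empty."""
    env = os.environ if environ is None else environ
    value = env.get(name)
    if not value:
        raise MissingSecretError(f"secret {name!r} was not injected")
    return value


# A fake environment is passed in here so the example does not depend on the host.
fake_env = {"DB_PASSWORD": "not-a-real-password"}
db_password = read_secret("DB_PASSWORD", fake_env)
```

The service code only names the secret it needs; how the value gets into the container is left entirely to the injector.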

At the end of the day, there are only a handful of important decisions to make, namely which:

- Orchestration engine to use

- Repository structure is good for our business need(s)

- Inspectors to use and what our pipelines should look like

From these decisions, a solid structure for your DevOps Docker-based microservices appears. As almost everything can be agnostic to the service itself, we put a strong focus on the service-agnostic components, allowing the service teams to focus on the services themselves.

A Real-World Example

We recently worked with a large West Coast healthcare organization that had a monolithic application written several years earlier in .NET. The development team had grown to over 50 people and the application was stifling innovation. No one person knew everything; no one had the full context of the app. As a result, it took a long time to onboard new developers. And so the decision was made to move to microservices. We began work with the organization to transition them to a DevOps, Docker-based microservices environment using ten key considerations. For deeper reading on these, please read our blog. The result: the firm was able to grow its developer productivity and speed its time to market.

As current market patterns continue, it’s becoming clear that nearly everyone will need a solution for Docker-based microservices. Yet, more than just technology, it is important to build a framework that supports your productivity, agility and security goals. Our goal at Flux7 is to help remove the mystery from DevOps-based Docker microservices, turning it into more of a puzzle where we help you define which pieces you need for short- and long-term success.

For additional reading on how to succeed with Docker, DevOps and microservices, or all three in tandem, please subscribe to our blog.